Binding Language Models in Symbolic Languages

We were joined by the authors Zhoujun and Tianbao. They raised three potential discussion points:

- Extension of Binder to structured data (programming languages, datasets)

- NL for code vs. code for NL

- Synthesizing code but with NL subtasks in them

- Incorporating human feedback

First, we addressed some clarification questions about how the method works

- Essentially 1) Codex in Codex + 2) prompting + 3) manual parsing

Then, we discussed Binder for real world languages

- This work relies on the fact that there is a clear separation between synthesizing the query and solving the “knowledge subtask.” This distinction isn’t as clear for real code.

- Nadav noticed that Copilot often uses functions that aren’t defined in the code

- – Clarification by Nadav: In my experience, Codex can reason about calls to unimplemented/undefined functions.

- This suggests a potential “hierarchical synthesis” approach: first, synthesize a high level skeleton (perhaps containing unimplemented functions). Then, synthesize the pieces of the skeleton that are more abstract. Repeat until you get a complete program.

We talked about LLM’s for code

- Autoregressive = bad

- What if you train a LLM on AST’s with holes?

- Kavi mentioned that asking LLM to show work first before prompting is better than prompting and then showing work (reference?)

One point raised is that DreamCoder could be integrated in this process:

- The hard part is figuring out what the abstractions/subtasks should be.

- Can we progressively learn higher level abstractions for binder-like programs with DreamCoder?

- In general, what’s the best way to use DreamCoder with LLM’s?

There was a brief digression about what it means to have a Binder language

- Binder-SQL and Binder-Python are essentially the same, but prompted differently.

- SQL version does better than Python version

- You can make other Binder languages too

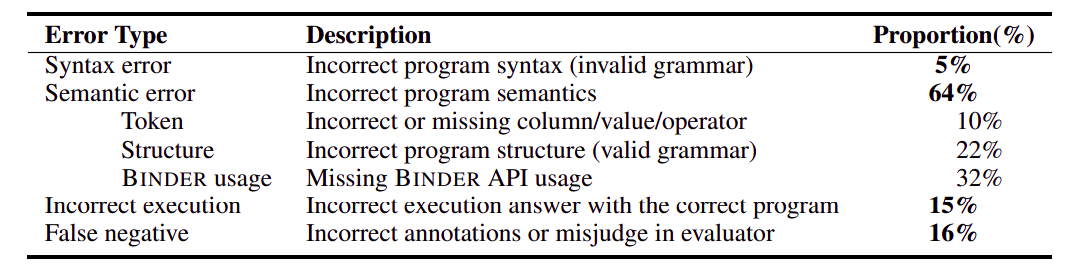

We also talked about error cases a bit

- Could telling Codex the error and asking it to correct itself help?

Other notes:

- Theo thinks benefit of this approach is that you’re not forcing Codex to generate everything in one go, rather that you’re abstracting away difficult subtasks. Then, when you go back and synthesize the subtasks one by one, you’re focusing on one simple task at a time.

- Can retrieval help this?

- They tried on TabFact, it helps a little

- What do the metrics look like if we use pass@k instead of majority vote?